Reparations for immaterial damage under the GDPR: A new context

Written by Giorgos Arsenis*

An Austrian court ordered a company to pay 800 euros in compensation to a data subject for immaterial (emotional) harm under Article 82 of the GDPR (General Data Protection Regulation). The judgment is not yet final, since both parties have appealed, but if it is upheld at second instance, the company could face a mass lawsuit involving about 2 million data subjects.

The case has attracted attention because its outcome could set a precedent for future cases. But let’s take a step back and look more broadly at this verdict and at the consequences this application of Article 82 might have for the judicial systems of other EU Member States.

Profiling

That a postal service collects and stores its customers’ personal data is nothing new. However, following a data subject’s request, it emerged that the Austrian Post had allegedly evaluated and stored data on the political preferences of approximately 2 million of its customers.

The company used statistical methods, namely profiling, to estimate each person’s level of affinity for Austrian political parties (e.g. a high probability of affinity for party A, a low probability of affinity for party B). According to media reports, none of the customers had consented to this processing, and in some cases the information was acquired by third parties.
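The article does not say exactly how the affinity scores were computed. As a purely hypothetical sketch of the general idea, the Python below gives each customer a "high" or "low" affinity label per party by looking up invented aggregate support figures for the customer’s region and age group. The segment table, the 0.3 threshold and every name in it are assumptions for illustration only.

```python
from dataclasses import dataclass

# Hypothetical aggregate statistics: estimated share of support for each
# party within a (region, age group) segment. All numbers are invented.
SEGMENT_SUPPORT = {
    ("Vorarlberg", "18-34"): {"Party A": 0.42, "Party B": 0.12},
    ("Vorarlberg", "35-64"): {"Party A": 0.25, "Party B": 0.31},
}


@dataclass
class Customer:
    name: str
    region: str
    age_group: str


def affinity_profile(customer: Customer, threshold: float = 0.3) -> dict[str, str]:
    """Label each party 'high' or 'low' affinity from the customer's segment.

    The individual is never asked: the label is inferred purely from the
    statistical segment they fall into. Unknown segments yield {}.
    """
    shares = SEGMENT_SUPPORT.get((customer.region, customer.age_group), {})
    return {party: ("high" if share >= threshold else "low")
            for party, share in shares.items()}


print(affinity_profile(Customer("Example customer", "Vorarlberg", "18-34")))
```

The customer never discloses anything in this toy model, yet the output is still a statement about that person’s presumed political opinions, the kind of data that Article 9 GDPR treats as a special category.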

Immaterial harm has a price

The local court of Feldkirch in Vorarlberg, an Austrian federal state bordering Liechtenstein, where the case was heard at first instance, ruled that the mere feeling of distress experienced by the claimant because of the profiling he had been subjected to without his consent constitutes immaterial harm. The claimant was therefore awarded 800 euros of the 2,500 euros he had initially claimed.

The court acknowledged that a person’s political opinions are a special category of personal data under Article 9 of the GDPR. It also acknowledged, however, that not every situation perceived as unfavourable treatment can give rise to a claim for compensation for moral damage. Nevertheless, it concluded that in this case the data subject’s fundamental rights had been violated.

The amount of compensation was calculated using an assessment method applied in Austria. Under that method, the court took two main factors into account: (1) that political opinions are a particularly sensitive category of personal data, and (2) that the processing was carried out without the data subject’s knowledge.

And now?

The verdict is no surprise: Article 82(1) of the GDPR expressly provides for compensation for immaterial damage. However, with 2.2 million data subjects affected by this processing, simple arithmetic yields a potential total of about 1.76 billion euros. What is certain is that, if the court of appeal confirms the decision, a wave of similar lawsuits will follow. This is why, in neighbouring Germany, many companies already specialise in cases like this.
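The estimate in the paragraph above is a single multiplication. The short sketch below makes its assumption explicit: it supposes, hypothetically, that every affected data subject sues and receives exactly the 800 euros awarded at first instance. Real litigation would not guarantee this, since each claim would be assessed on its own facts.

```python
# Hypothetical back-of-the-envelope estimate. Assumes (unrealistically)
# that every affected data subject sues and is awarded exactly the same
# amount as the claimant in Feldkirch.
AWARD_PER_CLAIMANT_EUR = 800        # first-instance award in Feldkirch
AFFECTED_DATA_SUBJECTS = 2_200_000  # number of data subjects cited in the article


def potential_exposure(award_eur: int, claimants: int) -> int:
    """Total payout if each of `claimants` received `award_eur`."""
    return award_eur * claimants


total = potential_exposure(AWARD_PER_CLAIMANT_EUR, AFFECTED_DATA_SUBJECTS)
print(f"{total:,} EUR")  # prints "1,760,000,000 EUR"
```

The actual exposure would depend on how many data subjects bring claims and on how courts assess each one.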

The Independent Authority

Following the Feldkirch court’s decision in early October 2019, on 29 October 2019 the Austrian Data Protection Authority (Österreichische Datenschutzbehörde) announced that it had imposed an administrative fine of 18 million euros on the Austrian Post. Beyond the processing of political opinions, the authority identified further violations: through further processing, the company had derived information on the frequency of parcel deliveries and on changes of residence, which it used for direct marketing. The Austrian Post, which is about half state-owned, announced that it would challenge the fine in court and defended the processing as legitimate market analysis.

What makes the verdict distinctive

The verdict in Feldkirch shows that courts can award compensation for certain “adversities” caused by actual or alleged violations of personal data protection rules.

Unlike the data protection authority, which imposed its administrative fine for multiple violations of GDPR provisions, the local court in Feldkirch focused on the ‘disturbance’ felt by the claimant.

The claimant simply stated that he ‘felt disturbed’ by what had happened, without pleading any moral damage flowing from the processing, such as defamation, copyright abuse or harassment by phone calls or emails. The moral damage arose from the mere fact that a company was processing his personal data unlawfully.


* Giorgos Arsenis is an IT consultant and DPO. He has long-standing experience in IT systems implementation and maintenance in a number of European countries. He has worked for EU agencies and institutions and in the private sector. He is qualified in servers, networks, scientific modelling and virtual machine environments. As a freelancer, he specialises in Information Security Management Systems and personal data protection.


Interview with Ilias Chantzos, Senior Director Government Affairs at Symantec

His title alone is impressive: “Senior Director Government Affairs EMEA and APJ, Global CIP and Privacy Advisor” for Symantec, a leading company in the cybersecurity sector.

In other words, Mr Ilias Chantzos is responsible for Symantec’s relations with governments in almost every country in the world (apart from the Americas) on cybersecurity and data protection issues. Symantec is one of the world’s leading cybersecurity software companies, with hundreds of millions of users.

After all, who is not familiar with ‘Norton Internet Security’, Symantec’s most popular, No. 1 consumer protection product?

We first met at the Data Privacy & Protection Conference, where he gave a vivid presentation on security breaches and breach notification. We asked him to share his views on current developments in the sector and on the role of NGOs. Despite his busy schedule, he gladly accepted our invitation, and we thank him for this extremely interesting interview.


– HD: With the implementation of the GDPR and the NIS Directive, Europe has become a pioneer in creating an integrated, prescriptive framework for cybersecurity and data protection. What are the next steps?

IC: The first step is the full implementation of the GDPR. This will be achieved through the adoption of specific rules, such as the guidelines issued by the European Data Protection Board (EDPB), through fines that act as a deterrent to non-compliant organisations, and through resolving the issues that arise from data transfers, especially to the United States, which remain a sticking point for the shared interests of large private companies. Then adequacy decisions with other countries, such as Korea, will follow, which will eventually create a large area of secure data flows, along with, of course, the final decisions on the ePrivacy Regulation.

– HD: On that subject, let me ask you about the efforts and the enormous sums that technology giants such as Google and Apple allegedly spend on lobbying to shape the ePrivacy Regulation in their favour.

IC: Well, isn’t it reasonable for companies to be interested in rules that concern and directly regulate them? The industry’s interests are not uniform; on the contrary, they are diverse and often conflicting. If, for example, a regulatory framework favours company X, the same framework will be less favourable to company Y, which operates in a similar but not identical sector. The same happens with ePrivacy.

Companies are ‘fighting’ each other because their interests differ. In Greece, there is neither the awareness nor the full picture of business interests, because profit and entrepreneurship have been demonised as a result of the ideological rigidity of the past. We should not see industry as a caricature of the bad capitalist, but realistically, through the prism of complex relationships and existing interests. This is the only way for organisations to act effectively. Let me give an example that everyone in Greece will easily understand. Legislation requiring double hulls on sea-going tankers is meant to protect the environment from oil spills. This type of legislation is supported by environmental NGOs and by shipyards (an industry that is itself a major polluter. . . can you spot the paradox already?), because it translates into brand-new orders. It will be supported by the coastal states of the European Union, yet it does not serve Greece (which has the longest coastline and enormous tourism), whose shipping is mostly ocean-going, since it raises Greek shipping costs while bringing Greece no income from the new orders.

Can you see how many contradictions there are in one simple example? And we haven’t even discussed the local communities that have suffered from marine pollution, or the tourism industry.

– HD: You mentioned fines earlier. Recently, we have seen huge companies such as Google, British Airways and Marriott receive enormous fines, leaving everyone with the impression that no one is immune when it comes to cybersecurity and privacy protection. So if absolute protection and perfectly secure processing of personal data are impossible, what is at stake here? Why is all this happening?

IC: The fines on the companies you mentioned were imposed for different reasons. In the Google case, the fine concerned the lawfulness of the processing, specifically how the data were collected and processed, whereas in the Marriott and British Airways cases the fines concerned inadequate data security measures. Nothing in life is absolutely secure, and the same applies to information security. The authorities, however, judged that these companies should have protected the data much more carefully. Unfortunately, they did not, and that is why fines were imposed, signalling that privacy protection is a top priority.

– HD: Why do you think fines in Greece are not equally high?

IC: Many factors are involved. Until now, Greek companies have invested only in the bare essentials; in an economic crisis, you do what is necessary to keep operating. Current fines, however, call on Greek companies that want to sell products and services abroad to answer a critical question that every foreign client will ask: “Can you protect my personal data effectively?” I understand that small and medium-sized enterprises see security mainly as a cost. It is like car insurance, which you may never use.

Nevertheless, security can become a competitive advantage. Even if we have fallen somewhat behind, medium-sized enterprises should catch up and improve the quality of their products and services. Quality will make you competitive. I understand that this quality may increase your costs, but you belong to the European Union. You have to play by its rules!

– HD: How do you see the role of NGOs in this sector? What would you advise an organisation such as Homo Digitalis to make its work more effective?

IC: Do not act as ‘rightsists’. In Greece, entire generations have been brought up in a culture of ‘rightsism’ and ‘political correctness’. The crisis we are experiencing is both economic and moral. This, of course, does not mean we have to stop fighting for our rights. With every demand we make, however, we ought to be well informed of what we lose and what we gain, that is, of the consequences of our choices, instead of asking blindly just because we can.

It’s the so-called ‘opportunity cost’: you should know, as far as possible, which other options you are rejecting before making your final choice. For example, under the current business model it is not possible to ask for free internet services without accepting advertisements (and I should note that I do not like them).

You don’t like advertisements? No problem: can you afford to pay for the service you receive, or for the degree of privacy you want? It’s not enough to ask; you also have obligations. Unfortunately, we are victims of the ‘I need X at all costs’ trend, without having thought about what we lose or what we accept. Being able to distinguish easy rightsism from what is really in our interest is a sign of maturity and of resistance to populism. In my opinion, this is the biggest challenge for all NGOs.

Getting closer to the vote on the proposed European Directive on Copyright

Next week will be very important for the future of the internet, particularly for freedom of expression and information and for the protection of privacy.

On Tuesday 26 March, the European Parliament will vote on the proposed European Directive on Copyright. We have previously published detailed articles on this legislative reform on our website.

The proposed Directive introduces certain positive provisions. However, others raise significant problems, the most important being Article 13, which will appear as Article 17 in the text put to the vote, since the text has been renumbered.

Article 13 is supposedly intended to improve the current situation and help creators enjoy the intellectual property rights in their works. However, it fails to achieve this aim and mainly serves the interests of major record, publishing and film companies, as well as those of companies that develop content-control (filtering) software.

Nor does Article 13 remedy the serious shortcomings of the existing regime. In particular, it lacks a specific provision for combating false intellectual property claims, while its general, poorly drafted and unclear provisions would lead to a slew of requests for preliminary rulings to the Court of Justice of the European Union.

Article 13 creates new problems and fails to strike a reasonable balance between the right to the protection of intellectual property, on the one hand, and the rights to freedom of expression and information and to respect for private life, on the other. Every type of material that internet users upload to content-sharing platforms, such as photos, texts, personal messages and videos, will be screened and will not be published if the filtering software deems that it infringes copyright.
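To illustrate the mechanism described above, here is a deliberately simplified sketch of a hypothetical platform that checks every upload against a database of "reference" fingerprints registered by claimed rights holders. Real content-recognition systems use fuzzy audio and video fingerprints rather than the exact SHA-256 hash used here, and all class and method names are invented. The point is structural: publication depends entirely on the filter’s verdict, and nothing in this sketch verifies that whoever registered a reference actually holds the rights.

```python
import hashlib


class UploadFilter:
    """Toy pre-publication filter: block any upload matching a registered reference."""

    def __init__(self) -> None:
        # fingerprint -> name of whoever claimed the work (never verified)
        self.references: dict[str, str] = {}

    @staticmethod
    def fingerprint(content: bytes) -> str:
        # Exact hash for simplicity; real systems use perceptual fingerprints.
        return hashlib.sha256(content).hexdigest()

    def register_claim(self, claimant: str, content: bytes) -> None:
        # Any claimant can register any content; ownership is not checked.
        self.references[self.fingerprint(content)] = claimant

    def try_publish(self, content: bytes) -> bool:
        """Return True if the upload is published, False if it is blocked."""
        return self.fingerprint(content) not in self.references


f = UploadFilter()
holiday_photo = b"user's own holiday photo"
f.register_claim("someone else", holiday_photo)  # a false claim
print(f.try_publish(holiday_photo))         # False: blocked despite being the user's own work
print(f.try_publish(b"a different photo"))  # True: no matching reference
```

Because the check runs before publication, a false or mistaken claim silently keeps lawful content offline, which is why a dedicated mechanism against false claims matters.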

Numerous experts have been sounding the alarm about the consequences of Article 13 and upload filters for freedom of expression and information on the Internet and for its diversity:

– The United Nations Special Rapporteur on freedom of opinion and expression, David Kaye, has set out substantive objections to Article 13.

– Germany’s Federal Commissioner for Data Protection and Freedom of Information, Ulrich Kelber, has clearly stressed that Article 13 would greatly strengthen the giants of online services and create challenges for privacy protection on the Internet.

– Germany’s Federal Minister of Justice and Consumer Protection, Katarina Barley, has urged German Members of the European Parliament to vote against Article 13.

– 169 prominent academics specialising in intellectual property have stressed that the provisions of Article 13 are misleading.

Furthermore, the number of MEPs committing to vote against Article 13 in Tuesday’s vote is constantly rising!

MEPs who are independent or belong to political groups across the political spectrum, such as the Greens/European Free Alliance (Greens/EFA), the European United Left/Nordic Green Left (GUE/NGL), the European People’s Party (EPP), the Progressive Alliance of Socialists and Democrats (S&D) and the Alliance of Liberals and Democrats for Europe (ALDE), have already openly expressed their opposition to Article 13.

Finally, many people are expressing their dissatisfaction with Article 13 and its very serious effects. More than 100,000 people have participated in the demonstrations that took place in many European countries on Saturday 23 March.

Call your representatives in the few days left!

Ask them to vote down Article 13!

A free and open Internet needs you!

Only two days remain until the final vote in the European Parliament (expected on 26.03.2019), which will be crucial for the future of the Internet. More than one hundred MEPs (the only Greeks among them being Ms Sophia Sakorafa and Mr Nikolaos Chountis) have already committed to voting against Article 13, while citizens in more than 23 cities across EU Member States are raising their voices in demonstrations against it.

Many European citizens have so far taken part in the Pledge 2019 campaign. Since the campaign began at the end of February, more than 1,200 calls, amounting to 72 hours of discussion, have been made. These numbers are unprecedented for such a short period and clearly show people’s active interest and their willingness to take part in the political debate when given the opportunity. In this way, citizens are also expressing their frustration at the unfounded accusations that many of the emails and tweets sent to MEPs in the previous weeks came from fake automated accounts!

Numerous experts have been sounding the alarm about the consequences of Article 13 and upload filters for freedom of expression and information on the Internet and for its diversity:

– The United Nations Special Rapporteur on freedom of opinion and expression, David Kaye, has set out substantive objections to Article 13.

– Germany’s Federal Commissioner for Data Protection and Freedom of Information, Ulrich Kelber, has clearly stressed that Article 13 would greatly strengthen the giants of online services and create challenges for privacy protection on the Internet.

– Germany’s Federal Minister of Justice and Consumer Protection, Katarina Barley, has urged German Members of the European Parliament to vote against Article 13.

– 169 prominent academics specialising in intellectual property have stressed that the provisions of Article 13 are misleading.

– The International Federation of Journalists (IFJ) has called on European legislators to improve the provisions and strike the necessary balance between the protection of intellectual property and the protection of freedom of expression and information.

– Sir Tim Berners-Lee, the inventor of the World Wide Web, has openly expressed his opposition to Article 13 and warned of the risks it poses to an open Internet.

Nobody can claim not to have known about the significant negative effects of Article 13! We must act and persuade MEPs to set aside partisan interests and defend European citizens’ rights!

Finally, many people are expressing their dissatisfaction with Article 13 and its very serious effects. More than 100,000 people have participated in the demonstrations that took place in many European countries on Saturday 23 March.

Call your representatives in the few days left!

Ask them to vote down Article 13!

Interview with Irene Kamara

Irene Kamara is a PhD researcher at TILT and an affiliated researcher at the Vrije Universiteit Brussel (LSTS). She is following a joint doctorate track supported by Tilburg University and the Vrije Universiteit Brussel. Her PhD examines the interplay between standardisation and the regulation of the right to the protection of personal data.

Before joining academia, Irene worked as an attorney at law before the Court of Appeal in Athens. She also completed traineeships at the European Data Protection Supervisor and at the European Standardisation Organisations CEN and CENELEC. In 2016, she worked with the European Commission as an external expert evaluator of H2020 proposals on societal security.

Irene has been selected as a member of the ENISA Experts List to assist in the implementation of ENISA’s annual Work Programme. In 2015, she received a best paper award and a young author recognition certificate from the International Telecommunication Union (ITU), the United Nations agency for information and communication technologies.

– Even though you had a great start to your professional career as a lawyer, you decided to pursue a PhD. Your dedication to academic research has led to a successful path with many interesting publications. Looking back, how hard was it to make this decision, and what advice would you give to people facing this dilemma?

Indeed, I worked for several years as an attorney at law before the Court of Appeal in Athens. Legal practice taught me valuable lessons, among others how different the law in the books is from the law on the ground, how to work efficiently under pressure, how to deal with clients, and how to prioritise tasks. Those are lessons I still carry with me in my academic career.

I was always fascinated by data protection, privacy and the confidentiality of electronic communications, so I decided to take a one-year career break to follow TILT’s master’s programme in Law and Technology. I feel that as a practising lawyer you always need to learn and evolve. Tilburg’s Law & Technology master’s programme, as you know, offers courses on privacy and data protection, intellectual property, the regulation of technologies and e-commerce, and gives students the opportunity to become experts in cutting-edge topics. After the master’s, I was offered a researcher position at the Vrije Universiteit Brussel under the mentorship of Prof. Paul De Hert. At VUB, I decided I wanted to do a PhD. I realised that through research you can reach a bigger audience than just your clients as a legal practitioner, and adopt a proactive rather than a reactive approach to problem solving, the latter often being the case in legal practice. Of course, the quality of the working environment and conditions and the high research standards also weighed in my decision. Both TILT/Tilburg University and LSTS/VUB have been wonderful homes, allowing me to evolve and progress as a professional by actively encouraging independent thinking and interdisciplinary research. To colleagues facing such a dilemma, I would say: dare to take a risk and leave your comfort zone. Test yourself with a research visit at an institution, by writing an academic paper and by presenting it at a conference. Keep in mind, too, that academic life is not an easy one either. And my advice is to choose an academic institution and a mentor that recognise and appreciate your hard work.

– You have participated as a speaker in panels at top-level conferences and events all around the world, such as the Computers, Privacy and Data Protection Conference in Brussels and the IGLP Conference at Harvard Law School. How did these experiences shape your research, and why is it important for researchers to exchange ideas with other experts at a global level?

I believe that participating in conferences is a necessary component of every scholar’s work, not only for sharing and exchanging knowledge, but also for validating and enriching your research results and cross-fertilising ideas. I am against the old-school approach of researchers, especially PhD candidates, isolated in an office writing up articles. While the outcomes of such research probably have theoretical scientific value as well, they will most likely lack societal impact.

I usually select conferences depending on the audience from which I would like to get feedback on my research. The IGLP Harvard conference last year was a great opportunity to expose my ideas to a law audience from literally all around the world. I met so many academics who were interested in my research or working on complementary topics. IGLP stands for exactly this: investing in a stable network of people eager to exchange their ideas globally and assist each other. Besides such conferences, I am lucky that my home institutions, TILT and LSTS, organise the annual CPDP conferences and the biennial TILTing conferences, in which I participate. These are very good opportunities for broadening one’s research interests.

– Your PhD topic examines the interplay between standardisation and the regulation of the right to protection of personal data. Tell us more about this field and the projects you are working on.

My current research field is human rights, with a focus on data protection and privacy, regulatory instruments such as standards, certifications and codes of conduct, and new technologies. My PhD looks at how soft law, such as technical standards, interacts with human rights regulation, with a focus on personal data protection. While there is a visible regulatory sphere in data protection, namely the Union’s secondary legislation, there is also a less visible set of rules embedded in technical standards. Such rules might translate legal requirements into technical controls or prescribe policies and behaviours for controllers and processors that go beyond the letter of the law.

I am researching this interplay and the various roles standardisation might play in regulating data protection. Apart from my PhD project, an interesting project I have been working on as principal researcher is the study on certification under Articles 42 and 43 of the General Data Protection Regulation for DG Justice and Consumers of the European Commission. Last year, I also collaborated with ENISA on a study on privacy standards. That was an exciting project: I worked together with standardisation experts, civil society and industry to produce the report.

– You are a member of various important organizations, one of which is the Netherlands Network for Human Rights Research (NNHRR). In the Netherlands, organizations such as Bits of Freedom and universities such as Tilburg University play an active role in promoting and protecting human rights in the digital age. What do you think about the situation in Greece in this field? Can civil society organizations and academia work together to push for positive outcomes?

Although they are rarely in the spotlight, NGOs and academia play a fundamental role in defending societal interests.

There are, however, some exceptions, such as the recent CNIL fine on Google, which followed complaints filed by NGOs. As far as I am aware, there has not so far been a coordinated initiative fighting for digital rights in Greece. I see Homo Digitalis as an initiative aiming to fill this gap, not only by informing the broader public through awareness-raising campaigns but also by flagging false and unfair practices, participating in public consultations and pursuing strategic litigation.

– You have co-authored publications with great academics in the field, such as Paul De Hert and Eleni Kosta. If you could share one piece of advice with young researchers reading this interview, what would it be?

Paul and Eleni are both my PhD supervisors and have been great mentors in my academic career so far. I have certainly learned a lot from collaborating with them.

I would advise new researchers to share and exchange ideas with colleagues, take risks in exploring new fields, set goals and work hard to achieve them. And, very importantly: don’t be afraid to aim high.

Social Engineering as a threat to Society

Written by Anastasios Arampatzis*

Social engineering is the psychological manipulation of people into performing actions or divulging confidential information. It is a technique that exploits our cognitive biases and basic instincts, such as trust, for the purposes of information gathering, fraud or system access. Social engineering is the “favourite” tool of cyber criminals and is now used primarily through social networking platforms.

Social Engineering in the context of cyber-security

Staff behaviour has a significant impact on an organisation’s level of cyber-security, which by extension means that social engineering is a major threat.

The way we train our staff in cyber-security affects the cyber-security of the organisation itself. Recognising the cultural background of our staff and designing their training to address various cognitive biases can help establish effective information security. The ultimate objective should be to develop a cyber-security culture, meaning the attitudes, beliefs, knowledge and behaviours that help protect an organisation’s sensitive and relevant information. A substantial part of a cyber-security culture is awareness of the risk posed by social engineering. If staff do not consider themselves part of this effort, they will disregard the organisation’s security interests.

Cognitive exploitation

The various techniques of social engineering are based on specific characteristics of the human decision-making process, known as cognitive biases. These biases arise from the brain’s tendency to find the easiest possible way to process information and make decisions quickly. For example, one characteristic feature is representativeness, namely the tendency to group related items or events. Each time we see a car, we do not need to recall its manufacturer or colour: our mind sees the object, its shape and its movement and signals that this is a “car”. Social engineers exploit this characteristic by sending phishing messages. We receive a message bearing the Amazon logo and we do not check whether it is genuine. Our mind tells us that it comes from Amazon, which we trust, so we click the link and give away personal data such as our card number. Similar attacks, such as manipulation or fraud by phone, aim to obtain confidential information from staff. A person who is not adequately trained to deal with such attacks will not even realise that they are happening.
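The phishing example turns on a check we skip: whether the message really comes from the brand it displays. A logo or display text can be copied freely, but the actual target of a link cannot be disguised in the same way. The sketch below shows one such check, comparing a link’s real host against a list of trusted domains. The allow-list, the function name and the example URLs are hypothetical, and this is only one signal among the many that real anti-phishing defences combine.

```python
from urllib.parse import urlparse

# Hypothetical allow-list: domains the reader treats as genuinely Amazon's.
TRUSTED_DOMAINS = {"amazon.com", "amazon.de", "amazon.co.uk"}


def link_matches_brand(url: str, trusted_domains: set[str]) -> bool:
    """True only if the link's actual host is a trusted domain or a subdomain of one.

    Matching on "." + domain stops look-alikes such as "evilamazon.com" and
    decoys such as "amazon.de.account-verify.example" from passing.
    """
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in trusted_domains)


for link in (
    "https://www.amazon.de/gp/your-account",           # genuine subdomain
    "https://amazon.de.account-verify.example/login",  # brand name used as a decoy prefix
    "https://evilamazon.com/signin",                   # look-alike domain
):
    verdict = "matches" if link_matches_brand(link, TRUSTED_DOMAINS) else "SUSPICIOUS"
    print(f"{verdict:10} {link}")
```

Passing this check does not prove a message is safe; it only catches one common trick that relies on our mind accepting the familiar logo at face value.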

Principles of Influence

Social engineering largely relies on the six principles of influence set out in Robert Cialdini’s book “Influence: The Psychology of Persuasion”, which in brief are:

- Reciprocity: people feel obliged to give back when they receive

- Consistency: people tend to act consistently with commitments they have already made, so persuaders look for and ask for such commitments

- Consensus: people will look to the actions of others to determine their own

- Authority: people will follow credible knowledgeable experts

- Liking: people prefer to say yes to those that they like

- Scarcity: people want more of those things there are less of

The scandal of Cambridge Analytica

After the election of President Trump, many media outlets discussed the possibility that social engineering strategies had been used to influence public opinion. The revelations about Cambridge Analytica and its use of Facebook users’ data not only raise concerns about data privacy and the lack of user consent, but also demonstrate the ease with which companies can plan and run social engineering campaigns against an entire society.

As with commercial advertising, it is very important to know your target group in order to reach your goal with the least possible effort. This is true of every influence campaign, and what the Cambridge Analytica scandal proved is that social engineering is not only a threat to the cyber-security of a company or an agency.

Social engineering is a threat to political stability and to free and independent political dialogue. The advertising techniques used on social networking platforms raise many ethical dilemmas. Political manipulation and the spread of misinformation and disinformation greatly aggravate the existing ethical issues.

The threat to Societies

Is it possible for social engineering to trigger a war or social unrest? Is it possible for foreign actors to deceive the citizens of a state into voting against their national interest? If a head of State (I will not use the word leader) wants to manipulate his or her citizens, can he or she succeed? The answer to all these questions is yes. Social engineering through digital platforms, which have permeated every social structure, is a very serious threat.

The fundamental idea of democracy is that power is vested in the people and exercised directly by them. Citizens can express their opinions through open, protected and free dialogue. Accountability, especially of government officials but also of individuals, is an equally important principle of democracy. The mass collection and exploitation of personal data without accountability endangers these principles.

At this point, however, it should be noted that social networking platforms such as Facebook are not solely to blame for disinformation campaigns or political manipulation. These platforms largely reflect our own actions. We create our own sterile world, our "circle of trust". The threat, therefore, is not the platforms themselves, even though they bear a share of responsibility for the way they collect data and for their advertising practices. The real threat lies in malicious actors and the way they exploit these platforms.

Large-scale social engineering campaigns that take advantage of human trust contaminate public dialogue with misinformation, distort reality and can push societies to the brink. The truth is doubted more than ever, and political polarisation is growing. Spreading news on social media without accountability leads to political distortion, loss of confidence in the political system and the election of extremist political parties. In short, social engineering is a serious threat to social and political stability.

Response to the threat

Since social engineering tactics target our lack of knowledge, our unawareness and our prejudices, the key to tackling them is awareness. Raising awareness has a dual effect. First, it lets us develop strategies and good practices to confront social engineering as such. Second, it lets us develop policies to mitigate its consequences.

Unlike the response to malicious software, addressing social engineering cannot mean simply "installing" some kind of software in humans to keep them safe. As Christopher Hadnagy notes in his book "Social Engineering: The Art of Human Hacking", social engineering requires a holistic, people-focused approach built around the following axes:

- Learning to recognise social engineering attacks

- Creation of a personalised program on cyber-security awareness

- Consciousness of the value of information searched by social engineers

- Constantly updated software

- Exercises through a simulation software and “serious” games (gamification)

Countering social engineering should become part of wider training in digital security. To combat social engineering at the level of society, we should be trained on three things. The first is the vulnerabilities of modern means of communication (e.g. social media). The second is the reasons why these means can be used to manipulate people (e.g. personalised advertising, political communication). The third is the ways in which they are manipulated (e.g. fake news). Awareness is the key to developing critical thinking against social engineering.

*Anastasios Arampatzis is a member of Homo Digitalis and a retired Air Force officer with more than 25 years of experience in various aspects of information security. During his service in the Air Force, he was a certified NATO evaluator in cyber-security matters and was honoured for his knowledge and efficiency. He is now a columnist for Tripwire's State of Security and for the Venafi blog. His articles have been published on many well-respected websites.

Facebook and Google know almost everything about you!

Written by Nikodimos Kallideris

“Everyone is guilty of something or has something to conceal. One must only look hard enough to find what that is” (Aleksandr Solzhenitsyn).

Did you know that, according to statistical surveys, there are more than five million Facebook account holders in Greece? Active Google accounts are even more numerous and are growing rapidly…

Have you noticed that both platforms offer their extremely useful and convenient services without any payment? Are they totally free? It seems that they are not! The two platforms, like many others, "feed" on the personal data you provide while using them. Our personal data have been called "the oil of the 21st century". Of course, you have previously consented to providing them to the platforms. But are you really aware of how much of your personal data is stored on their servers?

Let us first look at Facebook:

As a data subject, you have the right of access (Article 15 GDPR). It allows you to submit a request and receive from the company (the data controller) everything it has stored about you. You can exercise the right of access via the following link: https://www.facebook.com/help/1701730696756992

Once you have submitted the request and waited for it to be processed, you will receive a file. It contains all the written or audio messages you have sent; the exact time, place and device of every login to your account; the applications you used; your photos and videos; and the list goes on… All of this covers the period from the creation of your account until today!

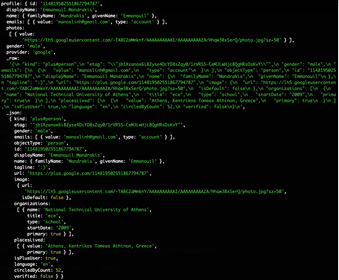

Now let us turn to Google:

If you have turned on GPS on your smartphone, Google records the history of every location you have visited. The record includes how long you stayed at each one and how long it took you to travel from one to the next. Do you want to check this for yourself? Follow the link: https://www.google.com/maps/timeline?pb

You can also easily find your entire search history from every device, even if you have deleted it (https://myactivity.google.com/myactivity), as well as your YouTube search history (https://www.youtube.com/feed/history/search_history).

Now try downloading all the data Google has stored about you to your computer (https://takeout.google.com/settings/takeout). Do not be surprised by the size of the file, which may take several hours to be sent. It is likely to be several gigabytes, depending on how often you use which services. The file contains everything: deleted e-mails, your browsing history in every detail, your calendar, the events you attended, your photos, purchases you made through Google and much more… Moreover, if you sign in to various platforms through your Google account ("log in with Google"), many of your personal data are recorded without your intending it. These include where you live, study or work, the number of your friends on Google Plus, your gender, your name and the languages you speak. Every move you make on the Internet leaves a clear and indelible digital footprint, even if you cannot remember it right now.

The photo shows the data that an online ordering platform learnt about Manos Mandrakis, a member of Homo Digitalis, when he logged in to it with his Google account.

Having followed the steps above, do you feel slightly numb or terrified? That is not surprising at all! You might feel "digitally naked". It may seem that an invisible power, like the watchman in Jeremy Bentham's Panopticon, is constantly recording your moves and can draw extraordinarily important conclusions from them. Will they be used against you or for your benefit? That depends on the incentives of whoever holds your data. In any event, Facebook and Google possess information that you have never shared even with your family or your best friend.

Bear that in mind! The modern digital world of information offers you incredible conveniences but also countless risks. Only you can protect yourself! And if you repeat the familiar, hazardous and naive "I have nothing to hide", I would urge you to reflect on the quotation at the top of this article.

Guidelines by Homo Digitalis in the context of the European Data Protection Day

The Council of Europe has established January 28 as the European Data Protection Day. The information society and the increasing use of the Internet keep expanding our digital footprint. Personal data are a constant bone of contention for companies whose business model is based on them.

What are the challenges, and what can you do to protect your personal data? For the European Data Protection Day, Homo Digitalis has created a short video with guidelines to help you prevent potential violations. It also shows ways to react if you feel that your rights have been violated.

Watch the video and get informed through our website!

The right to be forgotten

Written by Apollonia Ioannidou*

"What happens to the memory we do not recall? Can we preserve the past itself?" With these words, Proust, decades ago, described his anxiety about the things that are forgotten. It is undisputed that human memory is weak and cannot retain everything. Everyone suspects that there are things we cannot recall. Even our own self hides within its experiences and cannot remain solid and intact. Emotional memory runs deeper than the person; it surpasses them. Indeed, people by their very nature tend to forget; remembering is the exception rather than the rule.

In the short story "Funes, the Memorious", the great Argentine writer Jorge Luis Borges describes the tragic life of a man who never forgets anything. The story highlights how decisive forgetting is for a healthy and balanced human life. Technological development has filled precisely this gap by creating a web where information is kept intact, erasing the process of forgetting. The right to be forgotten was adopted both to protect individuals' personal data and to give them control over it. Although this right has been applied in certain cases, its exact content has not yet been clarified. Moreover, it conflicts with other well-known and established rights, which makes its analysis and clarification even more necessary.

What is the right to be forgotten?

The right to oblivion is defined as "the right not to refer to past events that belong to the past and are no longer relevant". One might think that this right applies mainly to the mass media. In that sense, it would be a person's right not to be the subject of journalistic interest and commentary on past situations in their life. This is, of course, considered reasonable. Otherwise, the individual would find it harder to reintegrate into society, for example if the media kept reporting on criminal offences they had committed in the past. This is not an absolute right, and a fair balance must be struck when there is a legitimate public interest in the information. However, it is not clear when such a legitimate interest exists.

The example of HIV-positive women

The case of the HIV-positive women in April 2012 is striking. During mass police checks, women were subjected to forced HIV tests. They were accused of working as prostitutes while knowing that they were HIV-positive and intending to transmit the virus to their alleged clients. At the same time, their photos and ID details were published, revealing both that they were HIV-positive and that they were being prosecuted. This publication is alleged to have had a serious impact on the data subjects, stigmatising them permanently and possibly leading some of them to suicide.

These data were made public on the grounds that there was a legitimate public interest in the information. Notably, however, the publication had the opposite effect, as did the continuous coverage of the topic and the constant display of the women's photographs. The men who had had sex with these women did not get medically examined, fearing that they too would be targeted by the media. It is therefore unclear whether and when the public interest actually requires publishing photographs and data to inform the public.

It is worth mentioning that the international literature uses a variety of terms for the right to be forgotten: the right to forget, the right to be forgotten, the right to oblivion and the right to delete.

The digital oblivion

By digital oblivion, we mean the right of individuals to stop the processing of their personal data and to have those data deleted when they are no longer needed for legitimate purposes. The European Commission recently requested clarification of the concept and made an initial attempt at its own (broad and vague) definition (see above). This right clearly means that a person's personal information must be irrevocably erased. In 2008, Jonathan Zittrain proposed a similar concept, called "reputation bankruptcy", which would give people a "fresh start" on the Internet. It may seem obvious what should follow when a person withdraws their consent or asks for the processing of their personal data to stop: the data must be irrevocably deleted from the data processor's servers. However, this does not fit neatly with legal, economic and technical reality.

The GDPR includes detailed and complex provisions that cover a wide range of situations. The level of protection it affords data subjects also deserves praise, especially regarding their rights, such as the right to be forgotten. This right will further protect data so sensitive that they could adversely affect the data subject's life. In the long run, these rights, some of which are novel, will improve the level of data protection for data subjects. To a large extent, they will also support the free flow of information by strengthening data subjects' trust in the security of their data, making it easier to do business across the EU.

*Apollonia Ioannidou holds a Bachelor's degree from the Law School of the Aristotle University of Thessaloniki. She also holds a Master's degree from Panteion University (Faculty of Public Administration, Law, Technology and Economy track). She is currently pursuing a second Master's degree, in Business for Lawyers, at Alba Business School.